A paradox: we dream of infinite possibilities but, when faced with them, we find ourselves paralyzed1. When everything's possible you can’t even start to reason about what comes next. Combinatorial explosion and uncertainty determine the horizon of our imagination. Even with 1000x more thoughts-per-second, you’d still need to decide what to think about… and you still might be wrong about it.
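To make the "1000x more thoughts-per-second" point concrete, here is a minimal sketch that treats thinking ahead as exhaustive search over a tree of options. That framing, the branching factor of 10, and the function names are illustrative assumptions, not a model of the brain. Under them, a 1000x speedup buys only about three extra steps of lookahead.

```python
import math

def extra_depth(speedup: float, branching: int) -> float:
    """Extra levels of exhaustive lookahead bought by a compute speedup.

    A tree with `branching` options per step has roughly branching**d nodes
    at depth d, so a speedup adds log_branching(speedup) levels.
    """
    return math.log(speedup) / math.log(branching)

def reachable_depth(budget: int, branching: int) -> int:
    """Deepest level that can be fully explored with `budget` node visits."""
    depth = 0
    level_nodes = 1   # nodes on the current level (the root)
    total = 1         # nodes visited so far
    while total + level_nodes * branching <= budget:
        level_nodes *= branching
        total += level_nodes
        depth += 1
    return depth

# Hypothetical numbers: 10 options per step, a million vs a billion "thoughts".
before = reachable_depth(1_000_000, 10)
after = reachable_depth(1_000_000_000, 10)
print(before, after, round(extra_depth(1000, 10), 2))
```

The gain shrinks as the number of options per step grows, which is why raw speed does not remove the need to choose what to think about.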

If you can recall every past moment with perfect precision, how do you decide what to recall? It turns out our brains are solving an unavoidable problem, no matter how smart you are:

Massive Memory → how to filter and retrieve?

Massive Compute → what should we compute?

Computational irreducibility implies that, for many systems, there is no shortcut to predicting the future: you have to run the process to see what happens. Our brains don't (just) predict many futures and pick one - they do something far more sophisticated and dynamic. At every moment, your entire being maintains an evolving map of what matters, when it matters, why, and how… you get the idea.

What matters?

As uncomfortable as it may be, “intelligence” is not that useful without judgment, without reasonability, without rationality2.

Quite literally, thinking doesn’t matter without a notion of salience:

Salience: the quality of being important to or connected with what is happening or being discussed

Much of what we consider reasoning isn’t pure logic; it's knowing when, where, why, and how to apply logic. To make use of logic, we must first choose the salient axioms to reason from.

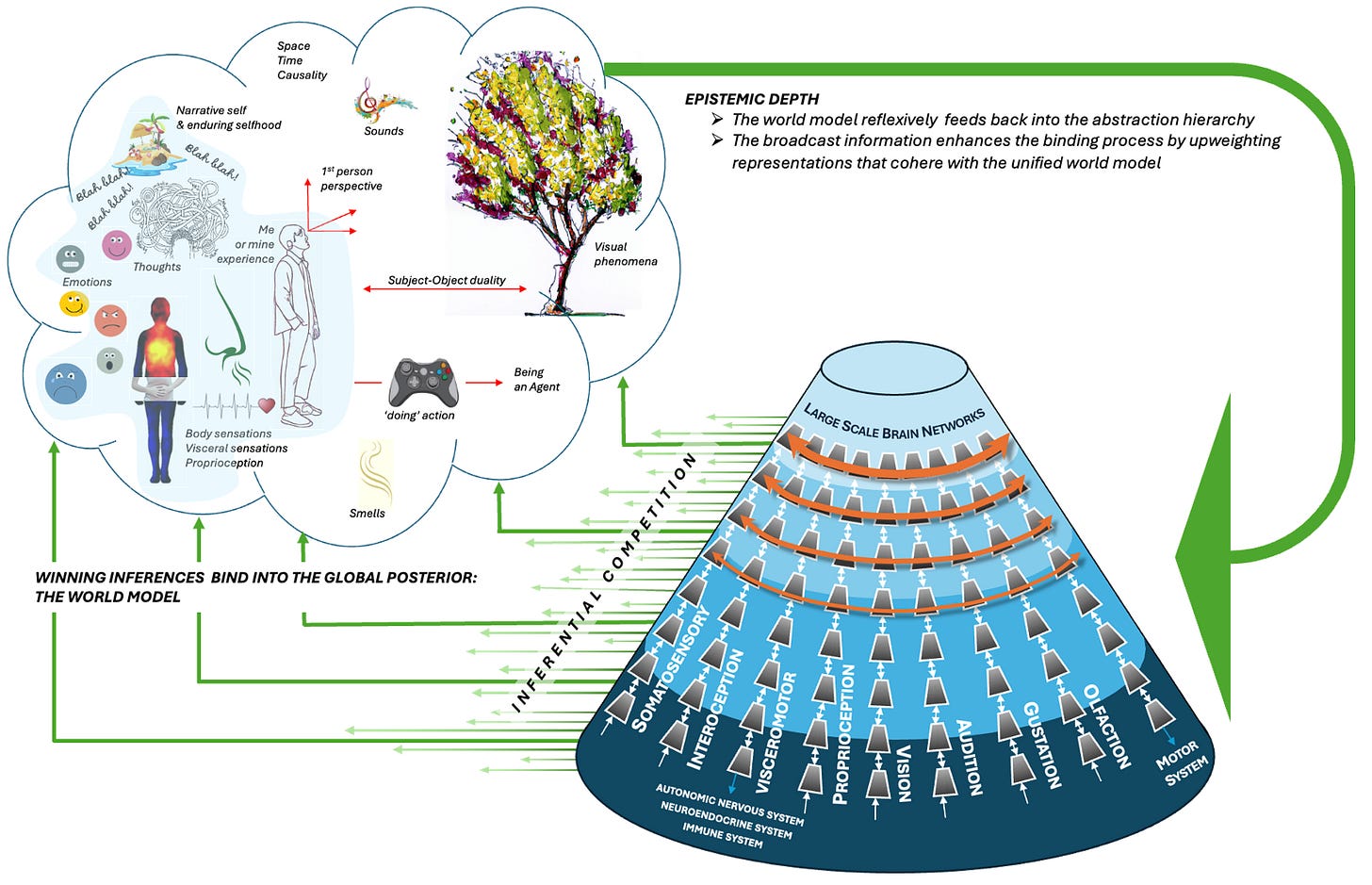

Similarly, to remember something you must intentionally ignore most of your memory3. What if our limited scope of attention and working memory is an evolved defense against overthinking? This implies that much of the “work” of the brain is relevance realization - filtering the infinite down to the actionable, based on current resources and context4.
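As a toy illustration of filtering by relevance under a limited budget, the sketch below scores each memory against the current context and keeps only the top few. Everything here is a stand-in chosen for illustration: the `recall` function, the word-overlap score, and the sample memories are hypothetical, and real salience is certainly not computed by counting shared words.

```python
def recall(memories: list[str], context: set[str], budget: int) -> list[str]:
    """Return at most `budget` memories, most relevant to `context` first.

    Relevance is a crude assumption: the number of words a memory shares
    with the context. Memories with no overlap are ignored entirely.
    """
    def salience(memory: str) -> int:
        return len(set(memory.lower().split()) & context)

    relevant = [m for m in memories if salience(m) > 0]
    # sorted() is stable, so ties keep their original order.
    return sorted(relevant, key=salience, reverse=True)[:budget]

memories = [
    "paid the electricity bill",
    "coffee with Sam about the garden",
    "garden tomatoes need water",
    "watched a film",
]
print(recall(memories, {"garden", "water"}, budget=1))
```

Changing the context or the budget changes what comes to mind, even though the stored memories are identical.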

What is this filtering mechanism? It’s the map I mentioned earlier: your Salience Landscape.

Uncertainty, Curiosity

Salience points us to what we know but also to what we could know. Curiosity is what we call the felt-sensation of salience, when aimed at uncertainty in our world-model5.

Whatever process "computes" salience seems to happen in subconscious, pre-linguistic domains. Our interface to it is the feeling of uncertainty and how we respond to it. It’s this felt process, in partnership with logical reasoning, that gives humans our unique skills: systematic precision applied at exactly the right (subjectively determined) moment.

How?

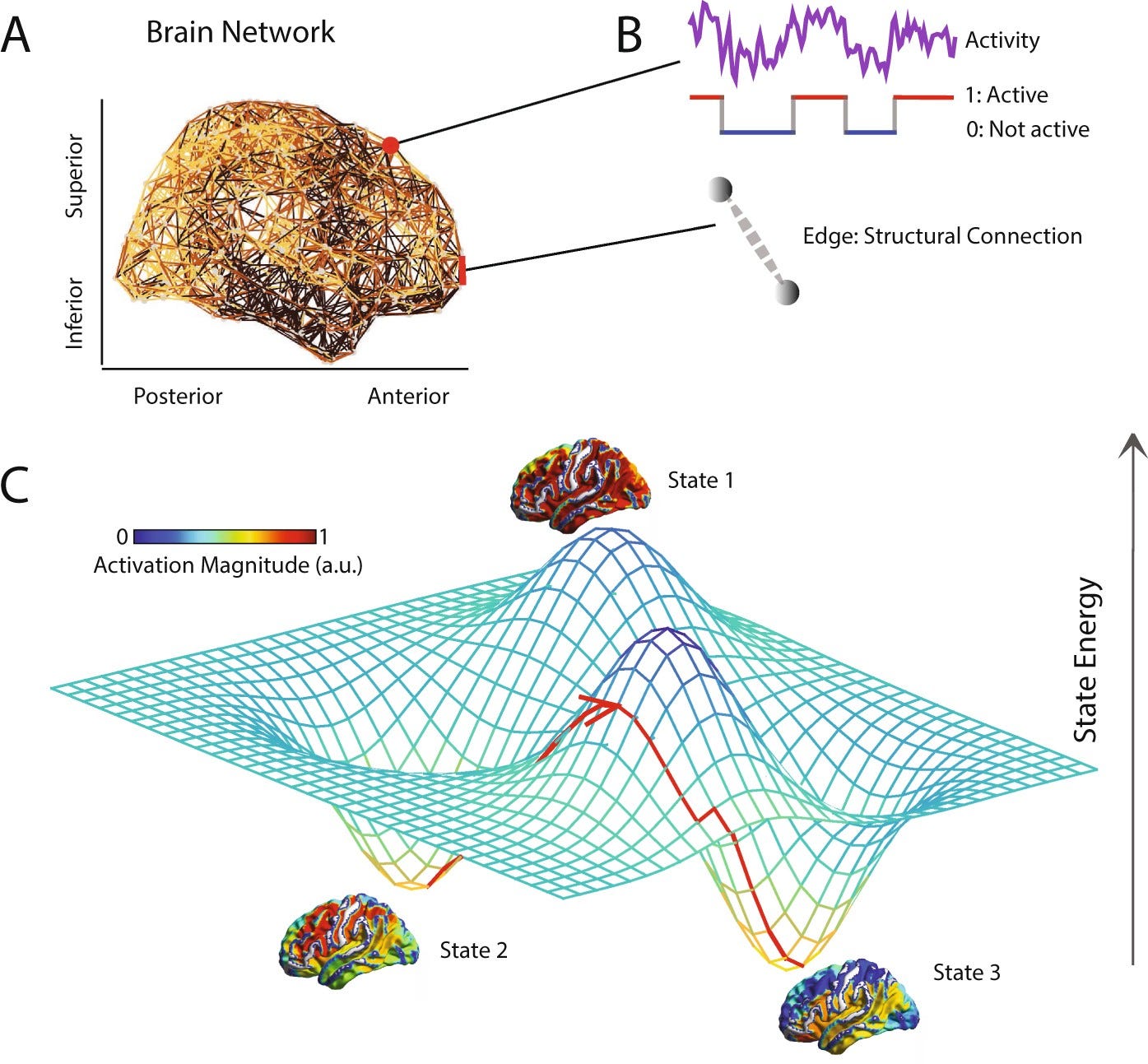

When lightning strikes it explores many paths, but not all possible paths, and then one is “chosen” without any central co-ordination. Why that path? The local structure (of the air) determines the global dynamics (path)6. In the same way: the local structure of your mind determines what spontaneously occurs to your “global” self.

That local structure is, again, our salience landscape, maintained by the feedback loop between logic and vibes. When our predictions fail and we learn new information, the result is "pockets of uncertainty" on the landscape, prompting our curiosity to explore new solutions. This process is inherently biased and propagates bias, but we can employ that bias skillfully. By intentionally curating the experiences in your life, you form a unique landscape and the ability to navigate it. Think of the (slightly tired) Picasso napkin story:

"it took me 40 years to draw that in 5 minutes"

This kind of ability is produced by skillful means: biasing your experience to evolve a personal salience landscape. This personal landscape is the “self”: it coordinates and choreographs the dance between modes of thought7.

The unifying goal of this self is to avoid self-deception8. That is: perceive reality and the self’s interaction with reality 'correctly' such that predicted and intended outcomes match actual outcomes in a tight feedback loop.

Self-development: Learn as much as possible without deceiving yourself.

What are we missing?

In practice, it’s not so easy to avoid self-deception. For example, the tech industry claims to love “disruption” but responds to the invention of AI by doing much more of exactly the same thing, faster. There’s inertia to our existing ways of thinking. On some level we have to force recalibration by incessantly asking "what's newly possible?" and “what’s no longer worth doing?”9

If the “glorious AI future” means that AI handles logic, humans need to get much better at salience landscaping to support it. This isn't just about AI or programmers or creatives in general - it's how all rational people can improve their thinking and retain agency.

The meta-skill is learning to care about how you are caring, improving the quality of your attention itself. When applied correctly, this is effortless. It’s possible to adopt perspective(s) that naturally reduce self-deceptive patterns over time10.

What is "better"?

To me, the deeper question is: mechanistically, how do we know what's salient? What are the “physics” of salience? Can we build tools to aid salience landscaping?11

The challenge is clear: at the level of self-perception, humans operate on baseless, recursive objectives12: "I want to flourish", “I want to get better”, “I want to survive” - infinitely open-ended pursuits, with no fixed definition or completion criteria. Somehow, our brains are running evolving algorithms to solve evolving problems defined using evolving symbols with evolving definitions - it’s all mutually recursive. Somehow, this seems deeply related to consciousness and the ego.

Our limits on prediction (due to computational irreducibility) are analogous to assembly theory: the only way to produce DNA was (approximately) the history of the universe before it. Complex structures, like a salience landscape for a dynamic objective, can only be produced by the interaction of simpler structures over time.

The sense of self is autopoietic: it curates and creates itself, endlessly pursuing a "better" definition of salience. That self learns from experience, then reflects on its own structure and, in turn, models the dynamics of our salience landscape in the landscape itself13.

This is niche construction at the personality level. We endlessly remodel our internal reality and try to live within it, such that everything "fits". In the same way that organisms create their own niche as they colonize it, our thought patterns construct their own niche - their interaction forming our salience landscape:

The process of "salience landscaping" is not precise, it’s more like tending to a wild garden. It grows on its own. But… can we do it “better”? Can we study the ecology and act as caretakers, not designers, of our selves? Yes, but it’s not easy to put into words. It can only be known tacitly.

Understanding your personal sense of salience and how it evolves through experience is the only way to preserve agency when interacting with modern culture, media and technology.

Could technology aid us in that process, without corrupting it?

✌️ Ben

Stuff I’ve been thinking about

This is your sign to listen to The Dillinger Escape Plan

There may be many (neuro)diverse strategies for this

Yeah, nerdy stuff. Many non-human animals also demonstrate curiosity for objects in their environment.

Obviously one compelling framing is: right brain handles uncertainty, left brain handles logic - magic happens in their collision

It's unpleasant and effortful to re-think our salience landscape, which is why we still argue about programming languages

I think so, I am trying

Until someone first identified the pattern of “the wind” we couldn’t actually “notice” it
